Spark on YARN: using the Docker Container Executor to process tasks …

On Spark, I started the master ser… As the OP mentioned in the comments, the container is being run with `docker run -it ubuntu`, with no port mapping. On top of that Ubuntu image, I have installed Spark 3.1.1 and Hadoop 2.7.
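Since the container above is started with no port mapping, nothing inside it is reachable from the host. A minimal sketch of how the `docker run` call could be assembled with published ports follows. The helper name `docker_run_args` and the `SPARK_PORTS` mapping are hypothetical, chosen for illustration; 8080 and 7077 are Spark's default standalone master web UI and master ports, and may differ in a customized setup.

```python
from typing import Dict, List


def docker_run_args(image: str, ports: Dict[int, int], interactive: bool = True) -> List[str]:
    """Build a `docker run` argument list that publishes the given ports.

    `ports` maps host port -> container port (hypothetical helper, not part of Docker).
    """
    args = ["docker", "run"]
    if interactive:
        args.append("-it")
    # One -p flag per mapping, in a stable order.
    for host_port, container_port in sorted(ports.items()):
        args += ["-p", f"{host_port}:{container_port}"]
    args.append(image)
    return args


# Assumption: default standalone ports (8080 = master web UI, 7077 = master).
SPARK_PORTS = {8080: 8080, 7077: 7077}

if __name__ == "__main__":
    print(" ".join(docker_run_args("ubuntu", SPARK_PORTS)))
```

The resulting list can be handed to `subprocess.run` or simply printed and pasted into a shell.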

On Docker, I have installed an Ubuntu image. On Ubuntu 18.04, I have installed Docker.

Ubuntu 18.04: Docker: Spark: Web UI not found

Standalone Spark cluster mode requires a dedicated instance, called the master, to coordinate the cluster. In this post we will cover the necessary steps to create a Spark standalone cluster with Docker and docker-compose.
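To make the master/worker relationship concrete, here is a rough sketch of the commands each container might run in the foreground. It assumes Spark's `bin/spark-class` launcher with the standalone `Master` and `Worker` classes; the host name `spark-master` and the helper functions are hypothetical, and the ports are Spark's defaults.

```python
from typing import List

MASTER_CLASS = "org.apache.spark.deploy.master.Master"
WORKER_CLASS = "org.apache.spark.deploy.worker.Worker"


def master_url(host: str, port: int = 7077) -> str:
    """URL that workers and drivers use to reach the standalone master."""
    return f"spark://{host}:{port}"


def master_command(host: str, port: int = 7077, webui_port: int = 8080) -> List[str]:
    """Foreground command for the master container (paths relative to SPARK_HOME)."""
    return [
        "bin/spark-class", MASTER_CLASS,
        "--host", host,
        "--port", str(port),
        "--webui-port", str(webui_port),
    ]


def worker_command(master_host: str, master_port: int = 7077) -> List[str]:
    """Foreground command for a worker container; it registers with the master URL."""
    return ["bin/spark-class", WORKER_CLASS, master_url(master_host, master_port)]


if __name__ == "__main__":
    # "spark-master" is an assumed service/host name, e.g. from a compose file.
    print(" ".join(master_command("spark-master")))
    print(" ".join(worker_command("spark-master")))
```

In a docker-compose setup, each list would typically become the `command` of one service, with the master's service name used as the host.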

#KITEMATIC STANDALONE DRIVER#

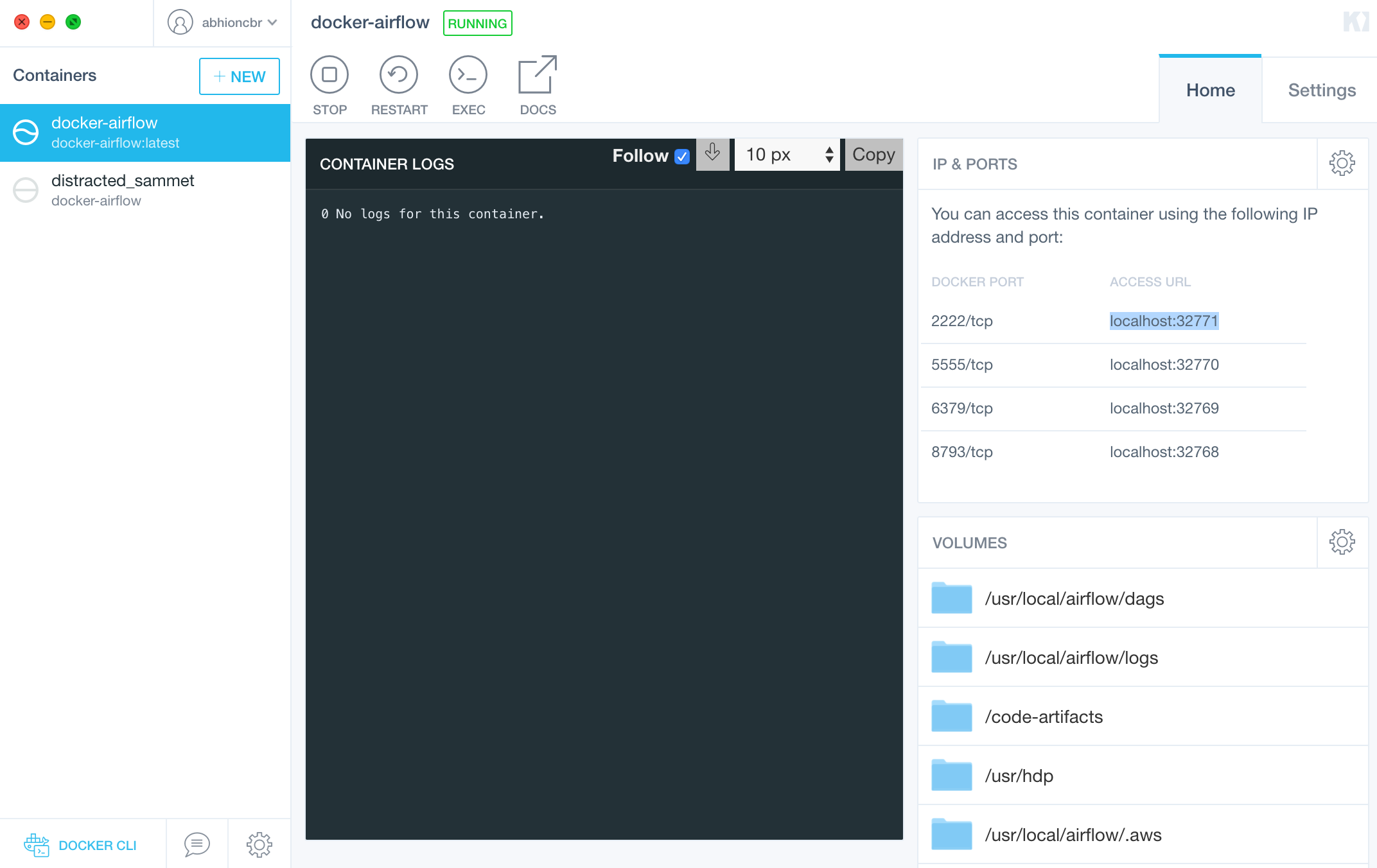

Running the Spark driver program in a Docker container

Actionml/docker-spark: Apache Spark Docker container image (standalone mode). Also, Docker is generally installed in most of the developer's …

Motivation: In a typical development setup for writing an Apache Spark application, one is generally limited to running a single-node Spark application during development on a local machine (such as a laptop).

By the way, I'm not going to explain here what the BranchPythonOperator does or why there is a dummy task, but if you are interested in learning more about Airflow, feel free to check my course right there. The second one is where the DockerOperator is used to start a Docker container with Spark and kick off a Spark job using the SimpleApp.py file.
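As a hedged illustration of that second task, the sketch below only builds the arguments one might pass to Airflow's `DockerOperator`; it does not import Airflow, so it runs on its own. The image name, the `/app/SimpleApp.py` path, the task id and both helper functions are assumptions made for this example, not values from the original post.

```python
from typing import Any, Dict, List


def spark_submit_command(app_path: str, master: str) -> List[str]:
    """Command line that submits a Python application to Spark."""
    return ["spark-submit", "--master", master, app_path]


def docker_operator_kwargs(image: str, app_path: str, master: str = "local[*]") -> Dict[str, Any]:
    """Keyword arguments for a DockerOperator task (hypothetical helper).

    With the Docker provider installed, these could be used inside a DAG as
    DockerOperator(**kwargs), imported from
    airflow.providers.docker.operators.docker.
    """
    return {
        "task_id": "spark_job",  # assumed task name
        "image": image,          # image that contains Spark and the app
        "command": spark_submit_command(app_path, master),
    }


if __name__ == "__main__":
    # Assumed image name and in-container path for SimpleApp.py.
    print(docker_operator_kwargs("my-spark-image", "/app/SimpleApp.py"))
```

Keeping the command construction separate from the operator makes it easy to check what the container will actually execute before wiring it into a DAG.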

#KITEMATIC STANDALONE HOW TO#

How to use the DockerOperator in Apache Airflow: what was previously not so easy to do is now completely doable.

#KITEMATIC STANDALONE INSTALL#

Install Docker on Ubuntu: log into your Ubuntu installation as a user with sudo privileges. The repository contains a Dockerfile to build a Docker image with Apache Spark.

Docker and Spark are two technologies that are heavily hyped these days. Here is the Dockerfile which can be used to build an image (docker build.) with Spark 2.1.0 and Oracle's server JDK 1.8.121 on the Ubuntu 14.04 LTS operating system:

Running an Apache Spark standalone cluster on Docker: for those who are familiar with Docker technology, it can be one of the simplest ways of running a Spark standalone cluster. Within the container logs, you can see the URL and port to which …

Running Apache Spark 2.0 on Docker

#KITEMATIC STANDALONE DOWNLOAD#

When you download the container via Kitematic, it will be started by default.

Setting up and working with Apache Spark in Docker

Download Apache Spark for Docker: once your download has finished, it is about time to start your Docker container.
